Baseline results of the IPN Hand Dataset

Tests are divided into isolated and continuous hand gesture recognition.

If you want your results to be included here, please send the information (method, reference & raw results) to gibran@ieee.org.

Isolated Hand Gesture Recognition

We segment all testing videos into isolated gesture samples using the manually annotated start and end frames. The learning task is to predict the class label of each gesture sample. As evaluation metrics for this test, we use classification accuracy (the percentage of correctly labeled samples) and the confusion matrix of the predictions.
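As a minimal sketch of the two metrics described above, the following Python snippet computes the classification accuracy and a confusion matrix from lists of integer class labels. The function names and the toy labels are illustrative assumptions, not part of the dataset's official evaluation code.

```python
def accuracy_percent(y_true, y_pred):
    """Percentage of samples whose predicted label equals the true label."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if not y_true:
        raise ValueError("at least one sample is required")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return 100.0 * correct / len(y_true)


def confusion_matrix(y_true, y_pred, num_classes):
    """matrix[i][j] counts samples of true class i that were predicted as class j."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix


# Toy labels for illustration only (hypothetical, not taken from the dataset).
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]

print(f"accuracy: {accuracy_percent(y_true, y_pred):.2f} %")
for row in confusion_matrix(y_true, y_pred, num_classes=3):
    print(row)
```

Rows of the matrix correspond to ground-truth classes and columns to predicted classes, so the diagonal holds the correctly classified samples.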

| Ref. | Model | Input | Modality | Accuracy | Inference time* |
|---|---|---|---|---|---|
| [1] | ResNeXt-101 | 32-frames | RGB-Flow | 86.32 % | 50.8 ms |
| [1] | ResNeXt-101 | 32-frames | RGB-Seg | 84.77 % | 37.4 ms |
| [3] | ResNeXt-101 | 32-frames | RGB | 83.59 % | 27.7 ms |
| [2] | C3D | 32-frames | RGB | 77.75 % | 76.2 ms |
| [1] | ResNet-50 | 32-frames | RGB-Seg | 75.11 % | 25.9 ms |
| [1] | ResNet-50 | 32-frames | RGB-Flow | 74.65 % | 39.9 ms |
| [3] | ResNet-50 | 32-frames | RGB | 73.10 % | 18.2 ms |

*Inference time measured on a single NVIDIA GTX 1080 Ti GPU.

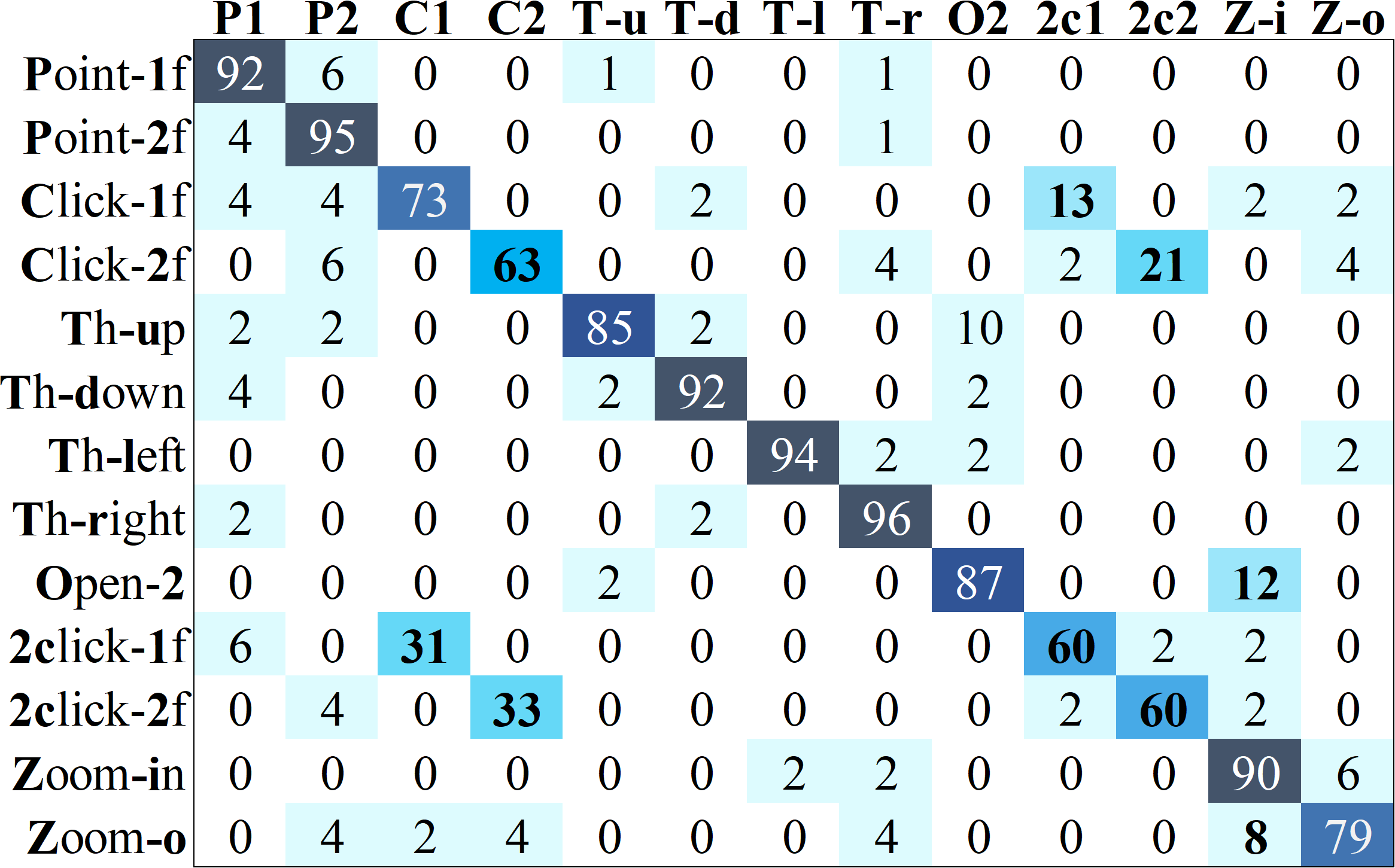

Confusion matrix of the best result [1] using ResNext-101 model with 32-frames RGB-flow:

Continuous Hand Gesture Recognition

We use the Levenshtein accuracy [3] as the evaluation metric for this test.

This metric uses the Levenshtein distance to measure the difference between the predicted and ground-truth gesture sequences by counting the number of item-level changes (insertions, deletions, and substitutions) needed to turn one sequence into the other.

For example, if the ground-truth sequence is [1,2,3,4,5,6,7,8,9] and the predicted gesture sequence for a video is [1,2,7,4,5,6,6,7,8,9], the Levenshtein distance is 2 (one substitution, 3 replaced by 7, and one inserted 6).

The Levenshtein accuracy is then obtained by dividing this distance by the number of gestures in the ground-truth sequence and subtracting the result from 1.

In the example, the accuracy is (1 - 2/9) × 100 = 77.78%.
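The worked example can be reproduced with a short Python sketch. It uses the standard dynamic-programming formulation of the Levenshtein distance; the function names are illustrative assumptions, and this is not necessarily the evaluation script used to produce the reported results.

```python
def levenshtein_distance(a, b):
    """Minimum number of insertions, deletions, and substitutions turning a into b."""
    prev = list(range(len(b) + 1))  # distances from the empty prefix of a
    for i, x in enumerate(a, start=1):
        curr = [i]
        for j, y in enumerate(b, start=1):
            cost = 0 if x == y else 1
            curr.append(min(
                prev[j] + 1,         # delete x from a
                curr[j - 1] + 1,     # insert y into a
                prev[j - 1] + cost,  # keep (cost 0) or substitute (cost 1)
            ))
        prev = curr
    return prev[-1]


def levenshtein_accuracy(ground_truth, predicted):
    """Levenshtein accuracy in percent, normalized by the ground-truth length."""
    if not ground_truth:
        raise ValueError("ground_truth must not be empty")
    distance = levenshtein_distance(ground_truth, predicted)
    return (1 - distance / len(ground_truth)) * 100


ground_truth = [1, 2, 3, 4, 5, 6, 7, 8, 9]
predicted = [1, 2, 7, 4, 5, 6, 6, 7, 8, 9]

print(levenshtein_distance(ground_truth, predicted))           # 2
print(f"{levenshtein_accuracy(ground_truth, predicted):.2f}")  # 77.78
```

With this definition the value becomes negative when the distance exceeds the ground-truth length; the sketch leaves that case unclipped.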

| Ref. | Model | Input | Modality | Levenshtein accuracy | Inference time* |
|---|---|---|---|---|---|
| [1] | ResNeXt-101 | 32-frames | RGB-Flow | 42.47 % | 53.7 ms |
| [1] | ResNet-50 | 32-frames | RGB-Flow | 39.47 % | 43.1 ms |
| [1] | ResNeXt-101 | 32-frames | RGB-Seg | 39.01 % | 39.9 ms |
| [1] | ResNet-50 | 32-frames | RGB-Seg | 33.27 % | 29.2 ms |
| [3] | ResNeXt-101 | 32-frames | RGB | 25.34 % | 30.1 ms |
| [3] | ResNet-50 | 32-frames | RGB | 19.78 % | 20.4 ms |

*Inference time measured on a single NVIDIA GTX 1080 Ti GPU.

References

[1] G. Benitez-Garcia, et al., IPN Hand: A Video Dataset and Benchmark for Real-Time Continuous Hand Gesture Recognition, in ICPR 2020. [code]

[2] D. Tran, et al., Learning spatiotemporal features with 3D convolutional networks, in ICCV 2015.

[3] O. Kopuklu, et al., Real-time hand gesture detection and classification using convolutional neural networks, in FG 2019. [code]